Future AI Product: Perfect Watermelon Detector

Make the world a sweeter place 🍉

Backstory

One of my fondest childhood memories is eating watermelon in summer. The sun was shining, the air was hot and still, and the cicadas seemed to have been chirping forever. The fan in the living room worked tirelessly. My parents would buy us watermelons. The good ones. As soon as a watermelon was cut open, the sight of it cooled my eyes; then I let it cool my stomach, and my mind too.

But I have never been a good watermelon picker myself. Many times I have bought a watermelon whose flesh was pale instead of red, and I had to throw it away. Every summer, when I walk past the watermelons in the supermarket, the same question comes to mind: how can I pick a good one?

The rise of MLLMs

Back in 2023, the advent of Multimodal Large Language Models (MLLMs) changed the way we interact with AI and brought new hope to watermelon lovers like me. MLLMs accept not only text but also images and audio as input, and they understand that input at an almost human level.

When experienced people pick a watermelon, they check the color and the skin of the fruit. They also tap it lightly and listen to the sound, because a ripe watermelon has a characteristic density that changes how it sounds. All these tips and tricks are based on pattern recognition, which is exactly the strength of MLLMs. So it should be entirely possible to train an MLLM to pick perfect watermelons reliably.

Collect the data

The idea was clear: we would use an open-source MLLM as the foundation and fine-tune it to detect good watermelons. To do that, however, we needed high-quality data. Unfortunately, although the Internet is full of selfies, the specific watermelon data we needed was not available.

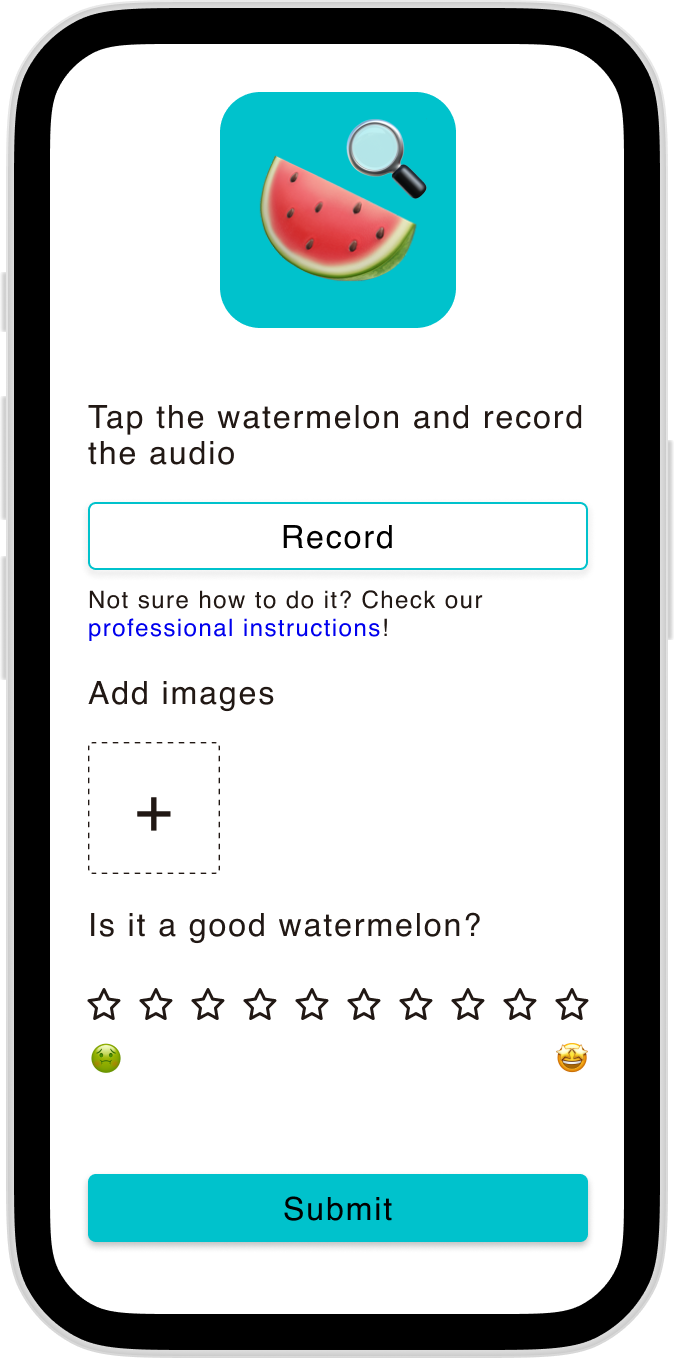

We had to collect the data from scratch. We quickly developed an app and hoped to find a large community willing to help us. Deep in our hearts, we believed that we were not the only ones who wanted to eat good watermelons in the summer.

Train the WLLM

Not surprisingly, our app was warmly welcomed by watermelon lovers around the globe. We received high-quality data daily from our community and began fine-tuning our Watermelon Large Language Model (WLLM). We also secured our first round of funding from a tech billionaire who wished to remain anonymous because some people consider eating watermelon inelegant. (When will the world be inclusive enough?)

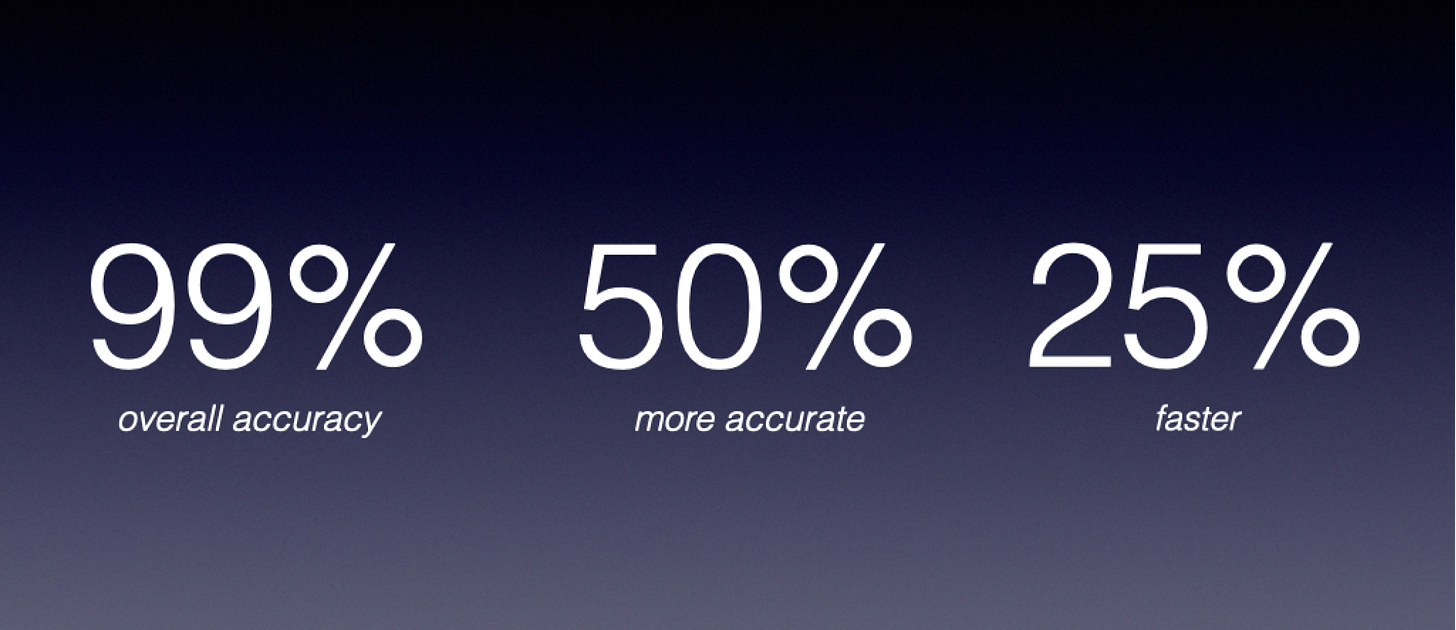

First Watermelon Picking Championship

In mid-2025, confident in the performance of our WLLM, we decided to hold the first Watermelon Picking Championship in Madrid, Spain. Many experienced watermelon pickers flew to Madrid to compete against each other and against the WLLM. The audience was excited to watch the championship and to enjoy the free watermelons after their siesta.

After two days of competition, hundreds of watermelons were eaten, and the result was clear. It was time to release the product.

On all your devices

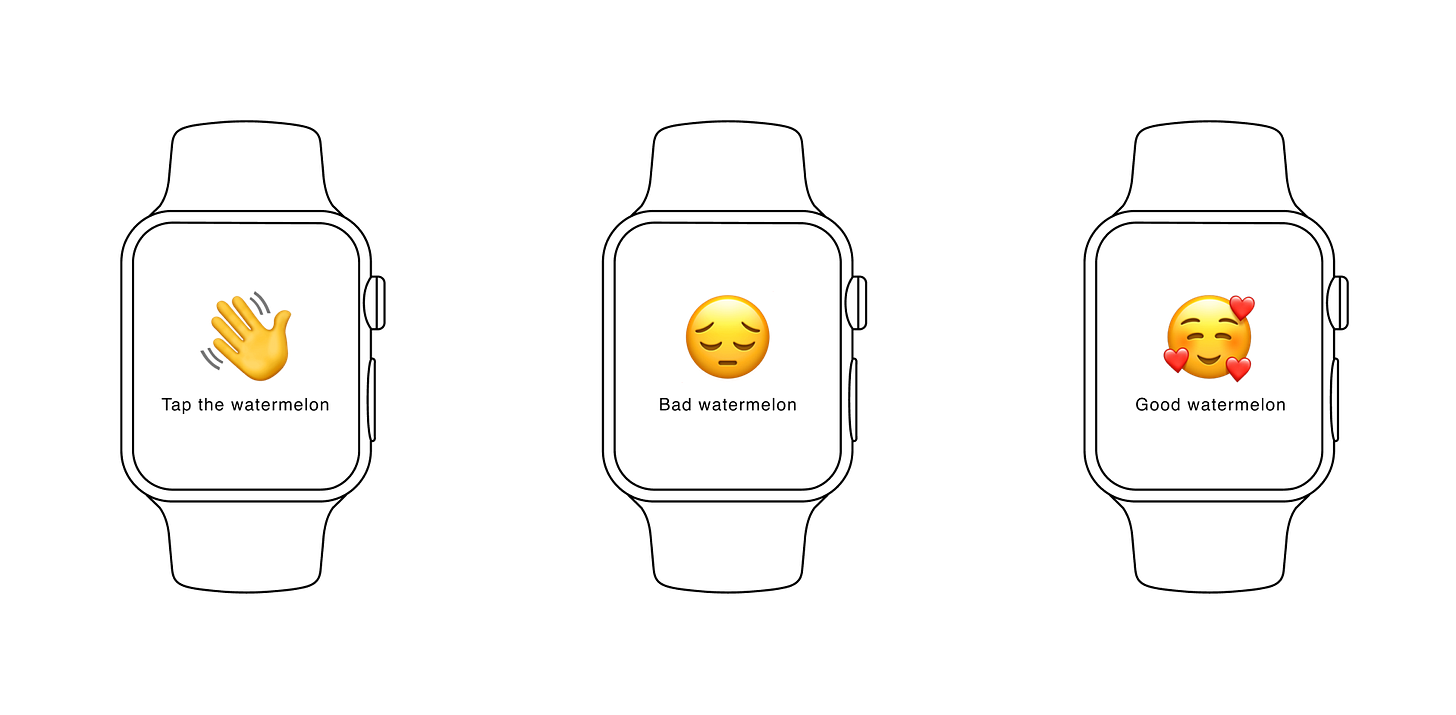

At the end of 2025, we launched our WLLM on all popular devices, such as smartwatches and smart glasses. After checking a watermelon's sound, its skin, or both, people received simple feedback indicating whether it was a good watermelon or not.

By August 2026, millions of people had used our product, and it was a great pleasure for us to help them enjoy good watermelons. Yes, AI doesn't have to mean cool robots; it can also be as simple as making the world a bit sweeter.